Too many organizations view the adoption of artificial intelligence (AI) as a box to check: hand the assignment to an internal software developer, deploy a model, and consider the task complete.

The reality is much more complex.

Many small businesses, and plenty of large enterprises, lack a developer on staff with the time (or acumen) to take on a new AI project. In such cases, the responsibility to learn and implement AI often falls on an already stretched technical team that must also handle day-to-day operations.

But expecting a small tech team or engineering group to deliver business-grade AI on top of their regular workload? That is a recipe for disaster. These teams lack the room to develop generative AI skills while keeping the internal systems they are responsible for running.

Unfortunately, for many businesses, the mandate to implement AI isn’t optional, so companies continue to grapple with how to integrate AI into their operations, often under tight budgets and timelines. Their challenge is not just to adopt AI, but to do so effectively and sustainably.

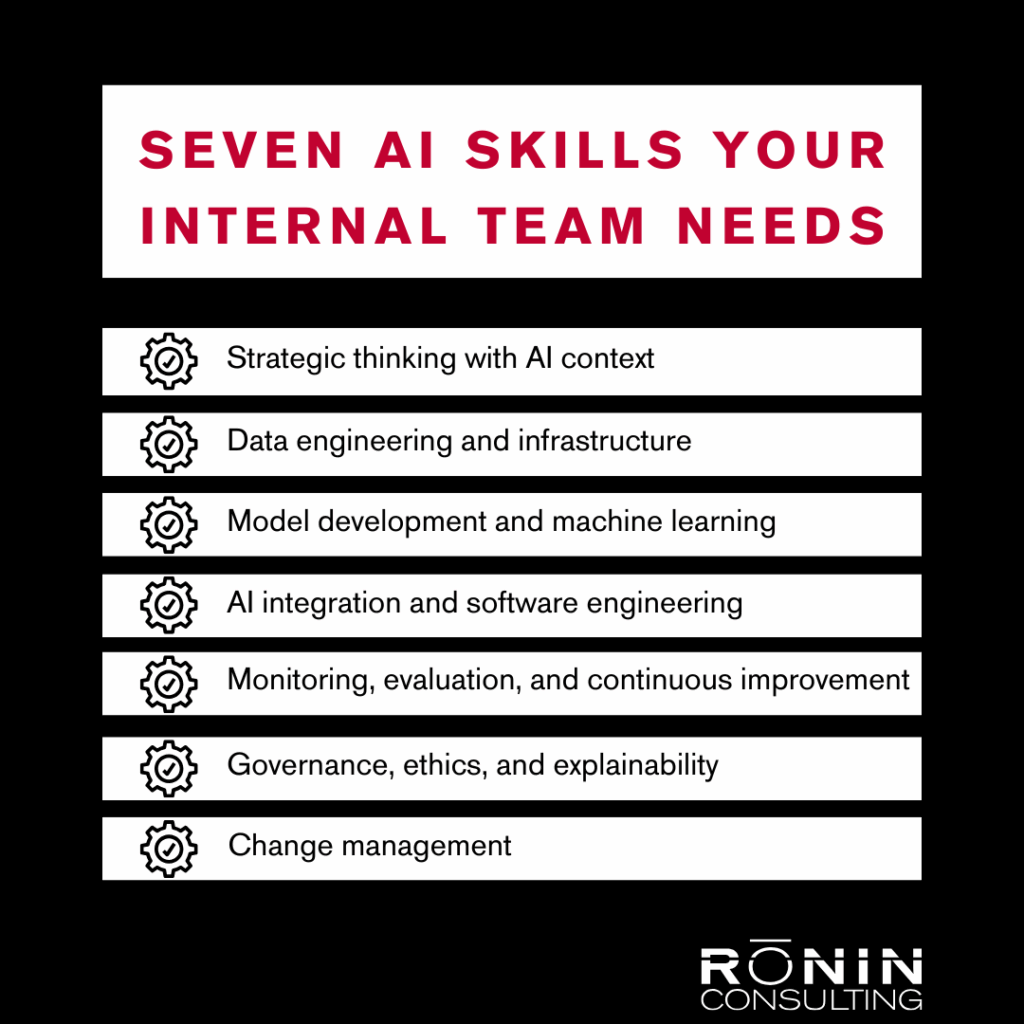

Unless you hire an architect or an AI software consultancy, you must be honest about the skills your internal team has for implementing AI and where the gaps exist. In this article, we discuss the critical skills needed to implement AI successfully within your organization, spanning strategy, data engineering, model development, software integration, monitoring, governance, and change management.

Strategic thinking with AI context

Before you introduce any AI technology, you need a clear strategy. Your business must evaluate how AI supports its objectives and strengthens its competitive position. Doing this well requires fluency in both business and technology. Start by identifying practical use cases, weighing trade-offs, and aligning AI efforts directly with measurable business goals. Key skills required to manage this include:

- Use case ideation and prioritization – generating AI opportunities, validating them, and picking which ones to pursue first.

- Business metrics alignment — mapping AI outputs to revenue, cost, retention, risk, or customer metrics.

- Risk and ethical foresight — anticipating model bias, fairness, privacy, explainability, and regulatory exposure.

If your team lacks this, any AI project risks becoming a technical island with little connection to real business value.

Data engineering and infrastructure

Even the smartest AI model fails without reliable data. AI requires strong data pipelines and infrastructure that can support training and inference reliably. Key skills required to manage this include:

- Data ingestion and ETL — pulling data from internal systems, cleaning it, and transforming it for use.

- Feature engineering — crafting the input variables (features) that make models perform well.

- Data quality assurance — detecting nulls, anomalies, drift, and leakage.

A confident AI team ensures data flows smoothly and that infrastructure supports iteration without constant ops friction.
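As a rough illustration of the data quality checks listed above, the following sketch uses only the Python standard library. The field names (`customer_id`, `monthly_spend`), the z-score threshold of 3.0, and the helper names are assumptions made for the example, not part of any particular toolchain.

```python
from statistics import mean, stdev

def null_rate(rows, field):
    """Fraction of rows where `field` is missing or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(field) is None)
    return missing / len(rows)

def zscore_outliers(values, threshold=3.0):
    """Return values whose z-score exceeds `threshold` (a simple anomaly check)."""
    if len(values) < 2:
        return []
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > threshold]

def id_overlap(train_ids, test_ids):
    """IDs present in both splits, one common source of train/test leakage."""
    return set(train_ids) & set(test_ids)

# Hypothetical records pulled from an internal system.
rows = [
    {"customer_id": 1, "monthly_spend": 42.0},
    {"customer_id": 2, "monthly_spend": None},
    {"customer_id": 3, "monthly_spend": 39.5},
    {"customer_id": 4, "monthly_spend": 41.0},
]
print(null_rate(rows, "monthly_spend"))  # 0.25
print(id_overlap([1, 2, 3], [3, 4]))     # {3}
```

Checks like these are usually run on every pipeline execution so that a sudden jump in null rate or an unexpected overlap stops bad data before it reaches training.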

Model development and machine learning

This is where much of the AI hype lies: building or customizing models. But even in this area, success rarely comes from building a model from scratch. What matters more is understanding how to adapt existing models, fine-tune them with your data, and validate that they perform reliably in real-world conditions. The skill is less about inventing something brand new and more about knowing how to customize wisely and integrate into your business. Key skills required to manage this include:

- Algorithm knowledge — classification, regression, clustering, reinforcement learning, embeddings, etc.

- Transfer learning and fine-tuning — adapting pretrained models to internal data.

- Hyperparameter tuning and validation — cross-validation, regularization, model selection.

- Robustness and generalization — techniques to prevent overfitting and improve resilience to distribution shift.

In practice, few teams build from scratch; most adopt open models and fine-tune at the margins. What matters is testing thoroughly and avoiding model brittleness.
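To make the validation step concrete, here is a minimal sketch of k-fold cross-validation in plain Python. The one-feature least-squares model and the function names are stand-ins chosen for brevity; a real project would more likely rely on an established library, and would shuffle the data or use a time-aware split rather than the contiguous folds assumed here.

```python
from statistics import mean

def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds over n samples."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, val
        start += size

def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b with a single feature."""
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var if var else 0.0
    return a, my - a * mx

def cross_val_mse(xs, ys, k=5):
    """Mean squared error averaged over k held-out folds (assumes k <= len(xs))."""
    scores = []
    for train, val in k_fold_indices(len(xs), k):
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        errors = [(ys[i] - (a * xs[i] + b)) ** 2 for i in val]
        scores.append(mean(errors))
    return mean(scores)

# Toy data: a perfectly linear relationship, so held-out error is near zero.
xs = list(range(10))
ys = [2 * x + 1 for x in xs]
print(cross_val_mse(xs, ys, k=5))
```

The point of the exercise is that every score comes from data the model did not see during fitting, which is what exposes overfitting before production does.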

AI integration and software engineering

You will need to wrap your model into production software. That means embedding AI into applications, APIs, user interfaces, and backends. If the model is never surfaced to users, it delivers no value. Your internal team must possess the necessary skills to build scalable services and seamlessly integrate AI outputs into existing workflows. Key skills required to manage this include:

- API development and microservices — turning model logic into consumable endpoints.

- Software design and architecture — modular, testable, and maintainable code.

- Latency, throughput, and resource optimization — making inference fast and cost-efficient.

- UI/UX integration — embedding AI output where people need it and in a way they trust.

Your engineers must understand how AI behaves under real-world load and integrate graceful failure modes and fallback logic.
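One way to picture graceful failure and fallback logic is a wrapper that gives the model a time budget and returns a safe default when the call is slow or raises. This is only a sketch: `predict_with_fallback`, the 0.5 second budget, and the pool size of 4 are illustrative assumptions, and `model_fn` stands for whatever callable wraps your model.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

# Shared worker pool for model calls; the size is an arbitrary choice here.
_executor = ThreadPoolExecutor(max_workers=4)

def predict_with_fallback(model_fn, payload, fallback, timeout_s=0.5):
    """Call model_fn(payload) with a time budget.

    Returns (result, source), where source is "model" or "fallback".
    """
    future = _executor.submit(model_fn, payload)
    try:
        return future.result(timeout=timeout_s), "model"
    except FutureTimeout:
        # Best effort only: a call that is already running is not interrupted.
        future.cancel()
        return fallback, "fallback"
    except Exception:
        # Any model error degrades to the fallback instead of failing the request.
        return fallback, "fallback"

result, source = predict_with_fallback(lambda text: text.upper(), "ok", "n/a")
print(result, source)  # OK model
```

Returning the source alongside the result lets the calling application log how often the fallback fires, which is itself a useful health signal.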

Monitoring, evaluation, and continuous improvement

Deploying a model is not the end. AI needs careful monitoring and iteration. Conditions change, models degrade, data shifts, and your team must stay vigilant. The models you deploy today may look outdated within a year as new architectures and efficiency techniques emerge.

Staying competitive means building a feedback loop that not only tracks current performance but also evaluates when it makes sense to adopt advances like more efficient large language models, new retrieval frameworks, or updated deployment methods. Key skills required to manage this include:

- Performance monitoring — tracking drift in accuracy, precision, recall, or business metrics.

- Alerting and anomaly detection — catching when model behavior changes unexpectedly.

- Retraining strategy — deciding when and how to retrain, and how to version models.

- Feedback loops — collecting human feedback or corrections and feeding them back into the training pipeline.

Teams should adopt a lifecycle mindset, encompassing model creation, deployment, monitoring, retraining, and redeployment.
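The monitoring and retraining items above can be sketched as a small check that compares current precision and recall against a baseline. The 0.05 tolerance and the function names are assumptions for illustration; the right threshold depends on the business cost of errors.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels encoded as 0 and 1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def needs_retraining(baseline, current, max_drop=0.05):
    """True if any metric fell more than `max_drop` below its baseline value."""
    return any(b - c > max_drop for b, c in zip(baseline, current))

# Hypothetical labels collected from a recent window of production traffic.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
current = precision_recall(y_true, y_pred)
print(current)                                  # (0.75, 0.75)
print(needs_retraining((0.90, 0.85), current))  # True
```

In a real deployment the same comparison would typically run on a schedule and raise an alert rather than print, with the baseline stored alongside the model version it belongs to.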

Governance, ethics, and explainability

AI raises new risks. Bias, fairness, transparency, privacy, and compliance matter. Every internal AI team must incorporate governance and ethics into the process from the outset. Strong governance will protect your business from regulatory and reputational risk and build trust with users who need confidence in AI-driven decisions. Key skills required to manage this include:

- Model explainability techniques — SHAP, LIME, counterfactuals, and attention visualization.

- Bias detection and mitigation — fairness metrics, reweighing, adversarial debiasing.

- Privacy and anonymization — differential privacy, masking, aggregation.

- Governance framework design — policies, audits, roles, documentation.

Teams that ignore these aspects risk regulatory backlash, ethical failures, or lost trust among employees or customers.
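As one example of a fairness metric, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The group labels and data are made up, and this is only one of several fairness definitions a team might choose.

```python
from collections import defaultdict

def selection_rates(groups, predictions):
    """Share of positive predictions (1) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rate (0 means parity)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs for two groups of four people each.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
print(selection_rates(groups, predictions))                # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(groups, predictions))  # 0.5
```

A large gap does not prove unfair treatment on its own, but it is a signal worth documenting and investigating as part of a governance review.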

Change management

Even a perfect AI solution fails if people do not adopt it. You must drive change across teams and embed new workflows around AI. Success depends on clear communication and building trust so employees understand how AI supports their work rather than threatens it. Key skills required to manage this include:

- Communication and training — explaining AI outputs, uncertainties, and risks to non-technical stakeholders.

- Process redesign — rethinking existing workflows to incorporate AI decisions and human oversight.

- Governance of human-in-the-loop — deciding when humans override AI and how to manage handoffs.

- Performance incentives — aligning compensation, KPIs, and incentives to new AI-enabled ways of working.

The technical team often needs to partner with operations, HR, and leadership to ensure adoption and alignment.
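The human-in-the-loop handoff described above often comes down to a routing rule. The following sketch sends low-confidence or high-stakes predictions to a person; the 0.85 threshold, the `always_review` set, and the label names are hypothetical values that each organization would set through its own governance process.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    label: str
    confidence: float
    route: str  # "auto" or "human_review"

def route_prediction(label, confidence, threshold=0.85, always_review=frozenset()):
    """Send low-confidence or high-stakes predictions to a human reviewer."""
    if label in always_review or confidence < threshold:
        return Decision(label, confidence, "human_review")
    return Decision(label, confidence, "auto")

print(route_prediction("approve", 0.95).route)                          # auto
print(route_prediction("approve", 0.60).route)                          # human_review
print(route_prediction("deny", 0.99, always_review={"deny"}).route)     # human_review
```

Keeping the rule this explicit makes it easy to explain to non-technical stakeholders and to audit later.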

Does your internal team have the right AI skills?

Implementing AI internally takes far more than choosing a model and switching it on. Think of it as building a stack of capabilities that work together: strategy to guide the vision, data pipelines to keep information flowing, models that can adapt, software that integrates AI into daily work, and governance to keep everything safe and ethical. Add in change management to bring people along and domain expertise to ground the technology, and you have the foundation for real success.

Miss even one of these layers, and your project faces serious risk. The better approach is to assess your current standing, close any gaps with skilled personnel or training, and start with smaller wins that demonstrate clear value. From there, you can scale into more complex systems with confidence.

Over time, your internal AI capability stops being a side project and starts operating as an engine that drives impact across the business.

Or, instead of training your team from scratch, you can partner with Rōnin Consulting through our SamurAI® services, giving you immediate access to expertise, proven frameworks, and practical support to accelerate your AI journey.

Contact us today to learn more about how we can help you with your AI challenges.
