Three months into an AI project, someone always says it: “We’re 90% done.”

Then six weeks later, you’re still at 90%.

If you’ve lived through an AI implementation, you know this moment. The early excitement has faded, the finish line keeps moving, and the team is stuck in what feels like an endless loop of tweaks, tests, and “just one more thing.”

This is the boring middle. And it’s where most AI projects either become real or quietly die.

The boring middle is where AI projects actually live

Industry research highlights how rare smooth AI adoptions really are. According to MIT’s 2025 “State of AI in Business” report, only about 5% of AI pilot programs deliver measurable business value, meaning roughly 95% fail to produce meaningful results or reach production. The finding suggests that success hinges less on choosing the right model and more on how well organizations plan and govern their AI initiatives.

When teams are asked why, they say “unexpected complexity” or “organizational readiness.”

What they’re really describing is the middle.

The shiny outer layer of AI (the part everyone loves to talk about) focuses on model choice, breakthroughs, and predictions about “what’s next.” But the work that determines success is far more methodical. Getting an AI system ready for real users requires steady, repetitive effort.

It’s not the part that gets applauded on LinkedIn.

It’s not the part that makes it into the marketing deck.

But it is the part that determines whether your AI feature becomes a dependable tool or just another idea that never shipped.

The boring middle includes cleaning and normalizing data you thought was ready, tightening prompts so they behave consistently across edge cases, building guardrails around unpredictable outputs, rewriting workflows that don’t match real user behavior, running comparison tests until results stop wobbling, documenting decisions made across dozens of iterations, and saying “we need another week” because the latest model update broke your original approach.
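To make “running comparison tests until results stop wobbling” a little more concrete, here is a minimal Python sketch. It assumes a hypothetical `generate` callable standing in for whatever model call your team actually uses; the name `agreement_rate` and the default of five runs are illustrative choices, not part of any library.

```python
from collections import Counter
from typing import Callable


def agreement_rate(generate: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Share of runs that return the most common output for the same prompt.

    `generate` is a hypothetical stand-in for a real model call.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Normalize lightly so trivial whitespace or casing differences don't count as wobble.
    outputs = [generate(prompt).strip().lower() for _ in range(runs)]
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / runs


if __name__ == "__main__":
    # Fake model that cycles through canned answers, purely for demonstration.
    canned = iter(["Approved", "approved ", "Denied", "approved", "approved"])
    rate = agreement_rate(lambda _prompt: next(canned), "Is this claim valid?", runs=5)
    print(f"agreement: {rate:.0%}")  # prints "agreement: 80%"
```

Exact-match agreement is a deliberately crude measure. For free-form text, a team would likely swap in a looser comparison, but the idea is the same: measure the wobble instead of eyeballing it.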

None of this is flashy. All of it is required.

These are the steps most teams underestimate, especially those implementing AI for the first time.

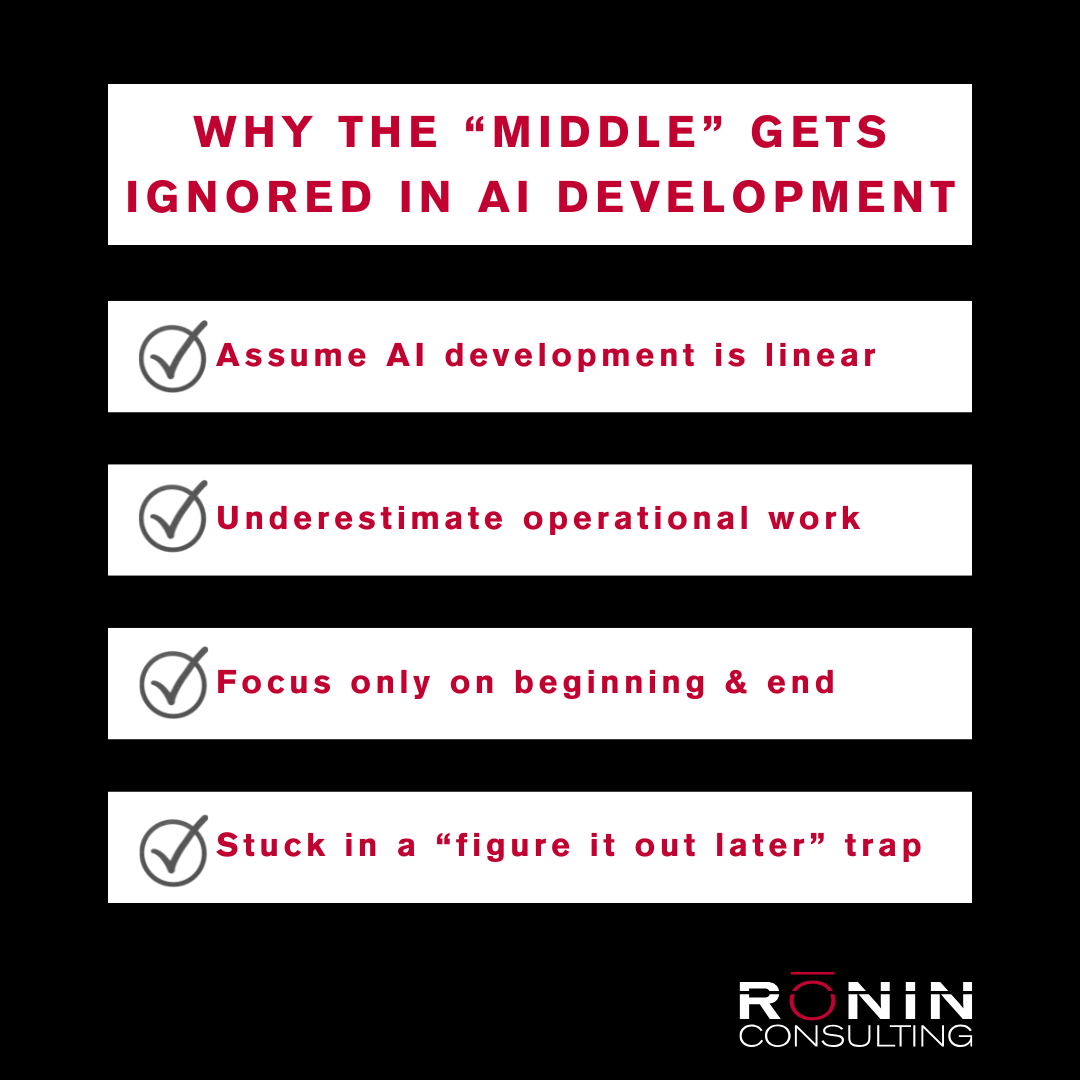

Why the middle gets ignored in AI development

Let’s face it: the middle is where a lot of the billable hours occur. So why is it so often overlooked? Partly because the work is repetitive and largely invisible outside the core team, which makes it easy for leaders to assume things are moving faster than they actually are.

Four more specific reasons explain it:

Leaders assume AI development is linear

Most leaders expect a predictable sequence:

Experimentation → Development → QA → Launch

Even in Agile environments, each sprint is a mini linear cycle: write the code, test the code, ship the code.

AI doesn’t behave like this.

A single prompt revision, data change, or model update can send you right back to the beginning. The old build/test/ship model doesn’t map cleanly onto AI development, and that mismatch is why teams consistently underestimate the effort required.

Teams underestimate the operational work

Model choice is maybe 20% of the total effort. The real work begins when you integrate that model into existing systems—and suddenly you’re wrestling with access control, audit requirements, error handling, retraining schedules, and workflow adoption.

Everything around the model consumes the majority of the timeline. These tasks rarely make the kickoff deck, but they always define the real deadline.

Everyone loves the beginning and the end

The kickoff feels energizing. The launch feels rewarding.

The middle is different. It’s where you comb through the data, rewrite prompts for the third time, and deal with issues no one anticipated in week one. Progress becomes less visible. Standups feel repetitive, and leadership begins to wonder whether the project is worth the investment.

And yet, this is the part that actually makes the launch possible.

The “we’ll figure it out later” trap

It starts with good intentions. Everyone is excited and wants to move forward, so foundational questions get pushed aside: Who owns what? What does “done” look like? How will we validate accuracy? How will this integrate into real workflows?

Those deferred decisions always come back in week six, when the team is already tired, and the pressure is real. Meetings multiply. Rework expands. The project gets stuck in the familiar cycle of being “almost done” for weeks at a time.

The last 10% stretches endlessly because the first 10% was never defined.

Teams who ship AI projects look different

Teams that consistently deliver AI (and not by luck) don’t underestimate the middle.

They scope tightly, choosing the smallest reliable feature they can ship with confidence. They treat prompts like software, versioning and reviewing them. They accept the feedback loop instead of pretending the work is linear. And they document relentlessly, so today’s decision doesn’t become tomorrow’s mystery. The teams that embrace this mindset move more predictably and experience far fewer last-minute surprises.
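One way to picture “treating prompts like software” is a small registry that fingerprints each prompt revision and records why it changed. The sketch below is an assumption-laden illustration, not a description of any particular tool: `PromptVersion` and `PromptRegistry` are hypothetical names, and many teams would simply keep prompts in Git instead. It needs Python 3.9 or later.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    name: str
    template: str
    note: str  # why this revision exists, so today's decision isn't tomorrow's mystery

    @property
    def fingerprint(self) -> str:
        # Short content hash: any edit to the template yields a new identifier.
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:12]


@dataclass
class PromptRegistry:
    _history: dict[str, list[PromptVersion]] = field(default_factory=dict)

    def register(self, version: PromptVersion) -> str:
        versions = self._history.setdefault(version.name, [])
        # Re-registering an unchanged template is a no-op.
        if not versions or versions[-1].fingerprint != version.fingerprint:
            versions.append(version)
        return version.fingerprint

    def latest(self, name: str) -> PromptVersion:
        return self._history[name][-1]

    def history(self, name: str) -> list[PromptVersion]:
        return list(self._history[name])


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.register(PromptVersion("summarize", "Summarize: {text}", "initial version"))
    registry.register(
        PromptVersion("summarize", "Summarize in 3 bullets: {text}", "outputs were too long")
    )
    for v in registry.history("summarize"):
        print(v.fingerprint, "-", v.note)
```

Logging the fingerprint alongside each model response makes it possible to trace a surprising output back to the exact prompt revision that produced it.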

The boring middle is the difference between ideas and outcomes

Here’s the truth: AI rarely fails because the model can’t do something. It fails because the systems, workflows, policies, and data structures around the model weren’t designed to support it.

The middle is where assumptions get tested, workflows get simplified, prompts become dependable, and confidence finally replaces guesswork.

When teams invest in this part, launches feel steady, almost anticlimactic. But when they skip it, the project spirals into fire drills and the painful sense that everything is “just one more week” away.

If you’re building anything AI-related, start here

Before you write your first prompt or pick your first model, get honest about the middle.

Ask the questions that actually determine whether your project ships:

- Who owns prompt behavior when it changes?

- Who validates accuracy?

- How often will we retest after model updates?

- What assumptions are we making about our data?

- What’s the smallest dependable scope we can deliver?

- Do we know what “good enough” looks like?
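Several of the questions above (who validates accuracy, how often to retest, what “good enough” looks like) can be written down as a small, rerunnable check. The following Python sketch is one possible shape, under stated assumptions: `generate` is a hypothetical stand-in for your model call, the substring match is a simplification, and the 0.9 threshold is a placeholder for whatever bar your team agrees on.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Case:
    prompt: str
    expected: str  # text the output must contain to count as a pass


def pass_rate(generate: Callable[[str], str], cases: list[Case]) -> float:
    """Fraction of cases whose output contains the expected text (case-insensitive)."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = sum(1 for c in cases if c.expected.lower() in generate(c.prompt).lower())
    return passed / len(cases)


def good_enough(
    generate: Callable[[str], str], cases: list[Case], threshold: float = 0.9
) -> bool:
    # The threshold is the written-down answer to "what does good enough look like?"
    return pass_rate(generate, cases) >= threshold


if __name__ == "__main__":
    # Canned responses standing in for a real model, purely for demonstration.
    fake_model = {
        "Is 2 + 2 equal to 4?": "Yes.",
        "Is the sky green?": "No.",
        "Is water wet?": "No.",
        "Is fire cold?": "no",
    }
    cases = [
        Case("Is 2 + 2 equal to 4?", "yes"),
        Case("Is the sky green?", "no"),
        Case("Is water wet?", "yes"),
        Case("Is fire cold?", "no"),
    ]
    print(pass_rate(fake_model.__getitem__, cases))    # prints 0.75
    print(good_enough(fake_model.__getitem__, cases))  # prints False
```

Rerunning the same cases after every prompt revision or model update turns “how often will we retest?” into a routine rather than a debate.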

The more clarity you build up front, the smoother everything else becomes. The teams winning at AI aren’t the ones with the best models—they’re the ones who’ve learned to love the boring middle.

So before your next AI kickoff, ask yourself: Are we planning for the exciting parts… or the parts that actually matter?

When you’re ready to ship, Rōnin can help

Most teams implementing AI run into the same challenges. The difference is that the successful ones know how to handle the middle.

At Rōnin Consulting, we scope AI projects realistically, define the operational requirements early, and guide teams through the repetitive but necessary work that makes a launch stable, not fragile.

If you’re planning an AI project and want a partner who understands how to get it shipped, reach out to the team, and we’ll help you navigate the middle and deliver something that actually works.