Project Description

A leading behavioral healthcare company approached us to help solve challenges with processing insurance claims. Business growth created unforeseen problems, and they needed help keeping up with the volume of claims to be processed and balancing the workload effectively across their claims processing teams.

This client wanted a cloud-first, custom application to provide a rules-driven claims assignment and management solution. The application had two goals: boosting claims throughput and giving managers better insight into completed work.

Technologies Used

Infrastructure

- Azure Functions

- Azure Data Factory

- Azure SQL Database

Server-Side

- .NET Core

- Entity Framework

- Dapper

Front-End

- Angular

- PrimeNG

- Sass

Management

- Azure Bicep

- Azure Monitor

- Azure DevOps

Challenge

The client’s collections team uses a third-party electronic medical record (EMR) system to process insurance claims for payment. However, the EMR does not provide tools to prioritize, assign, and monitor the work of the collections agents. A solution was needed to gather the open claims from the EMR, then automatically prioritize and assign them to agents. Claims team managers also needed insight into the status of claims and the productivity of their teams. The overall aim was to process more claims without increasing the number of claims agents.

Our Approach

We worked closely with the collections team to understand their pain points and how the custom application could streamline and optimize their day-to-day work. Requirements were gathered and written as User Stories for the developers to work from. We identified two types of users, collections agents and their managers, and each needed different capabilities:

Team Managers

- Prioritize and re-prioritize the claims that needed to be worked.

- Define rules for assigning claims to users.

- Forecast claims balances, expected revenue, and the total number of claims within a period.

- View at-a-glance dashboards of the teams’ productivity.

Collections Agents

- A work queue that shows the claims to be processed and in what priority.

- The ability to update the status as the claims are being worked on and completed.

- The ability to split a single claim containing insurance and patient-pay charges into multiple claims that can be worked in parallel by multiple claims agents.
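The claim-splitting capability can be pictured with a small sketch. This is an illustration only, written in Python for brevity rather than the project's .NET code, and it assumes each charge carries a `responsibility` label of `"insurance"` or `"patient"`. The `Charge` and `Claim` types, the field names, and the child claim ID format are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Charge:
    description: str
    amount: float
    responsibility: str  # hypothetical values: "insurance" or "patient"


@dataclass(frozen=True)
class Claim:
    claim_id: str
    charges: tuple


def split_claim(claim):
    """Return one child claim per responsibility type found on the parent claim."""
    groups = {}
    for charge in claim.charges:
        groups.setdefault(charge.responsibility, []).append(charge)
    if len(groups) < 2:
        return [claim]  # only one kind of charge, so there is nothing to split
    # Child IDs are derived from the parent ID (an assumed naming scheme).
    return [
        Claim(f"{claim.claim_id}-{kind}", tuple(charges))
        for kind, charges in groups.items()
    ]


parent = Claim(
    "C-2001",
    (
        Charge("Therapy session", 180.0, "insurance"),
        Charge("Copay", 25.0, "patient"),
        Charge("Group session", 90.0, "insurance"),
    ),
)
children = split_claim(parent)
```

Each child claim can then be placed in a different agent's work queue, while the shared ID prefix keeps the children traceable to the original claim.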

User Experience

Ease of use was an important consideration for the application. With the help of the client’s subject matter experts, we designed screens that were intuitive and easy for the users to learn. The user interface (UI) includes screens for the claims processors to manage their assigned work and screens for the managers to view and redistribute work items. Custom dashboards provide managers with an at-a-glance overview of team productivity.

Angular was selected as the UI technology, and the UI runs in an Azure App Service. The client’s existing Azure Active Directory authenticates users.

Systems Integration and Data Management

The new application had to access claims data from the EMR without negatively impacting the EMR’s performance. To accomplish this, we created a database independent of the EMR and tailored its data model to the application’s functional and reporting requirements.

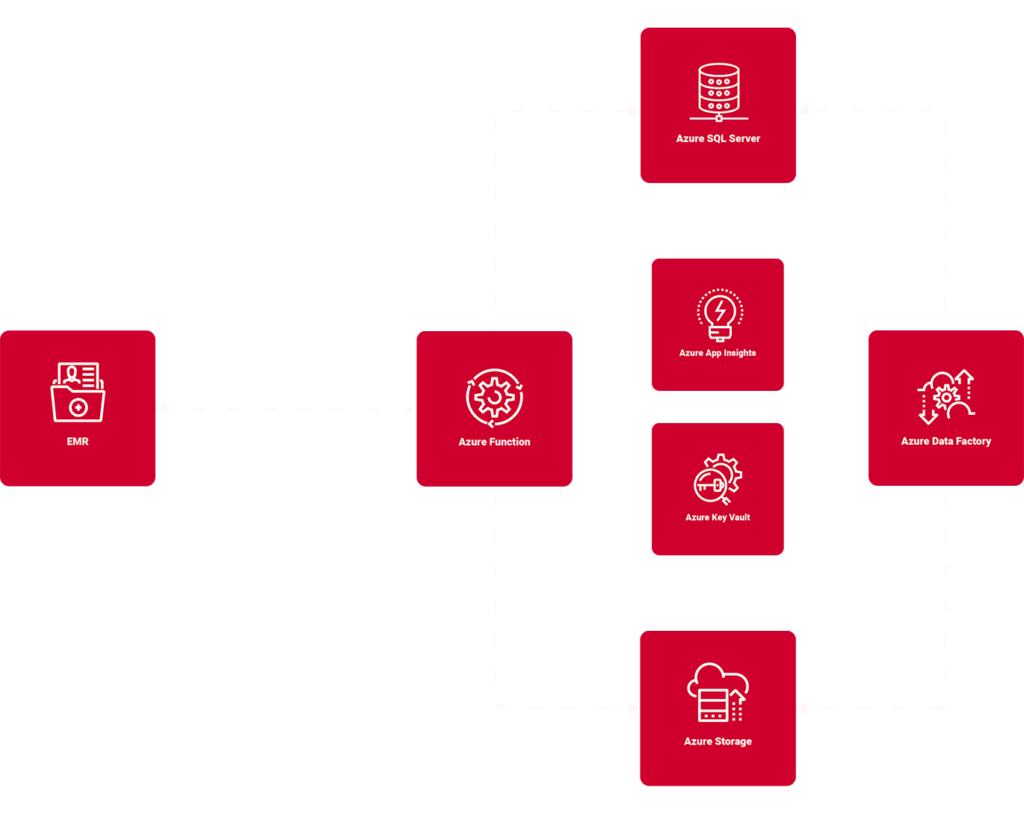

Managing data sourced from a third-party system entails capturing and processing the ongoing data updates within that system. To account for this, we used Azure Functions and Azure Data Factory to extract, transform, and load (ETL) the EMR data into the new database.

An Azure Function with a timer trigger makes nightly requests via the EMR’s APIs. The EMR responds with a JSON payload containing claims data updated since the last run of the interface. The Azure Function takes the JSON payload, creates a CSV file, and drops the file into Azure Blob Storage. The CSV is then transformed into a relational format and stored in an Azure SQL database.
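The JSON-to-CSV step can be sketched as follows. This is a minimal illustration in Python using only the standard library, not the project's actual .NET function. The column names and the shape of the payload are assumptions, since the EMR's schema is not described here, and the upload to Blob Storage is left out.

```python
import csv
import io
import json

# Hypothetical column names; the EMR's real payload schema is not known.
CSV_COLUMNS = ["claim_id", "payer", "balance", "updated_at"]


def claims_json_to_csv(payload: str) -> str:
    """Flatten a JSON array of claim objects into CSV text with a header row."""
    claims = json.loads(payload)
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=CSV_COLUMNS, lineterminator="\n")
    writer.writeheader()
    for claim in claims:
        # Missing keys become empty cells; keys outside CSV_COLUMNS are dropped.
        writer.writerow({col: claim.get(col, "") for col in CSV_COLUMNS})
    return buffer.getvalue()


sample_payload = json.dumps([
    {"claim_id": "C-1001", "payer": "Acme Health", "balance": 250.0,
     "updated_at": "2024-01-15T02:00:00Z"},
    {"claim_id": "C-1002", "payer": "Beta Mutual", "balance": 80.5,
     "internal_flag": True},
])
csv_text = claims_json_to_csv(sample_payload)
```

Pinning the column list in one place keeps the CSV layout stable even if the source payload gains or loses fields, which makes the downstream load step easier to reason about.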

Once the data load is complete, a second Azure Function runs and uses a custom-developed rules engine to prioritize the work and assign the claims to agents. For example, agents that work with a particular insurance company are automatically assigned only claims for that insurance provider. Before the new system, someone manually assigned these claims, which was time-consuming.
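To make the idea concrete, here is a simplified sketch of payer-based assignment. It is written in Python for illustration and is not the project's rules engine. The priority ordering (oldest claims first, larger balances as tie-breaker) and the least-loaded-agent rule are assumptions made for the example, as are all type and field names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Claim:
    claim_id: str
    payer: str
    balance: float
    days_outstanding: int
    assigned_to: Optional[str] = None


@dataclass
class Agent:
    name: str
    payers: set  # insurance companies this agent works with
    queue: list = field(default_factory=list)


def priority(claim):
    # Assumed ordering: oldest claims first, larger balances break ties.
    return (-claim.days_outstanding, -claim.balance)


def assign_claims(claims, agents):
    """Assign each claim to the least-loaded agent who handles its payer."""
    unassigned = []
    for claim in sorted(claims, key=priority):
        eligible = [a for a in agents if claim.payer in a.payers]
        if not eligible:
            unassigned.append(claim)  # no matching agent; left for manual routing
            continue
        agent = min(eligible, key=lambda a: len(a.queue))
        claim.assigned_to = agent.name
        agent.queue.append(claim)
    return unassigned


agents = [
    Agent("dana", {"Acme Health"}),
    Agent("eli", {"Acme Health", "Beta Mutual"}),
]
claims = [
    Claim("C-1", "Acme Health", 100.0, 30),
    Claim("C-2", "Beta Mutual", 500.0, 45),
    Claim("C-3", "Acme Health", 900.0, 30),
    Claim("C-4", "Gamma Care", 50.0, 10),
]
leftover = assign_claims(claims, agents)
```

Because claims are processed in priority order, each agent's queue ends up sorted by priority as well. Claims with no eligible agent are returned rather than silently dropped, so a manager can route them by hand.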

Managing the Hosting Environments

When using Azure services, it’s necessary to secure configuration and authentication information. We leveraged Azure Key Vault for this. Azure Key Vault safely stores information while making it securely accessible to the application’s services.

To help make deployments easy, consistent, and reliable, we used Azure Bicep to define and manage the Azure resources needed in each environment (development, quality assurance, and production).

Application Insights, part of Azure Monitor, is integrated with the application to provide detailed information to the client’s IT team about the application’s performance and reliability.